Is neural storytelling a mature opportunity for content creators?

Three years ago, agency Vertical Leap looked into the then-future of neural storytelling. Now Chris Pitt imagines the future role humans will have in AI content creation thanks to rapid advances in deep learning.

How can AI aid media storytelling without posing a risk to society? / Lenin Estrada via Unsplash

Artificial intelligence (AI) is rarely out of the news, with constant enhancements to the technology making headlines. Increasingly, AI algorithms are writing news stories, creating works of art and generating video content for commercial purposes.

We’re not quite at the point where AI is writing and directing its own movies yet, but the prospect seems more plausible with every year that passes. This raises a lot of questions for marketers and other storytellers about the role that smarter AI algorithms will play in the near future, and how best to use them.

Successful experiments in neural storytelling

The days of AI writing articles for major publications are already here. In September 2020, The Guardian published an article eerily titled: ‘A robot wrote this entire article. Are you scared yet, human?’ The British publication was neither the first nor the last to publish AI-written content: The Wall Street Journal and Lifehacker, among others, have also published journalism penned by machines.

We’re now at a point where algorithms can write articles and even create imagery for them. These articles were all written using OpenAI’s GPT-3 system.

With so many articles written by AI, it would be easy to think the technology is ready to take the helm from professional journalists, but all is not as it seems. As explained by Ben Dickson in his teardown for TechTalks, The Guardian’s GPT-3-written article can easily give readers the wrong impression about AI.

In reality, creating an article with GPT-3 requires a journalist or editor to provide a topical prompt or title and write an introduction for the model to work with. From here, the model predicts text one word at a time, drawing on patterns learned from a huge volume of web text, to produce 500 words of (hopefully) relevant content. In the case of The Guardian’s article, the publication repeated this process eight times and edited down the results into the most coherent version possible.
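For readers who want to picture that workflow, here is a minimal Python sketch of the prompt, generate and edit loop. It makes several assumptions: `generate_draft` is a hypothetical stand-in that only pads the introduction with filler words (it does not call GPT-3 or any real API), and the topic and introduction strings are placeholders rather than The Guardian's actual inputs. The point is the shape of the process: a human supplies the prompt and opening, the model is run several times, and a human editor works from the pile of drafts.

```python
import random


def generate_draft(prompt: str, intro: str, seed: int, max_words: int = 500) -> str:
    """Hypothetical stand-in for a text-generation model.

    A real system would send `prompt` and `intro` to a language model.
    This stub ignores the prompt's meaning and just appends filler words
    to the introduction so the example runs without any external service.
    """
    rng = random.Random(seed)  # a different seed gives a different draft
    filler = ["models", "predict", "the", "next", "word", "from", "patterns"]
    words = intro.split()
    while len(words) < max_words:
        words.append(rng.choice(filler))
    return " ".join(words[:max_words])


def collect_drafts(prompt: str, intro: str, n_drafts: int = 8) -> list[str]:
    """Run the generator several times; a human editor then picks and trims."""
    return [generate_draft(prompt, intro, seed=i) for i in range(n_drafts)]


# Placeholder inputs (assumptions, not the real prompt or introduction).
topic = "Example topic supplied by an editor"
opening = "Example introduction written by a journalist."

drafts = collect_drafts(topic, opening, n_drafts=8)
print(len(drafts), "drafts ready for a human editor")
```

The selection and editing step is deliberately left out of the code, because in the process described above that part is done by people, not by the model.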

The marketing benefits of smarter machines

AI powers some of the most sophisticated predictive analytics systems in the industry, and the technology has a lot to offer marketing teams as it continues to mature. Neural networks may not be able to write full, coherent articles by themselves, but they’re already providing basic customer support and automating lead generation with AI chatbots.

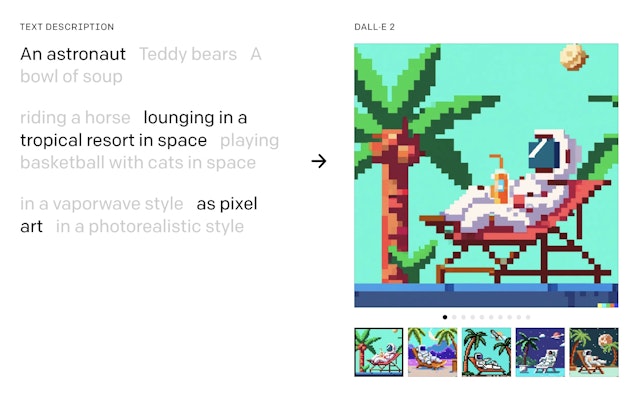

We’ve touched on AI’s potential to generate images and visual content. OpenAI, the company behind GPT-3, also has its own solution for this called DALL-E 2.

Like GPT-3, the system requires users to input descriptions and artistic styles. Operationally, it works along similar lines too, except that it generates images, drawing on patterns learned from a large collection of captioned images gathered from the web.

The algorithm itself isn’t a breakthrough (no more than GPT-3), but it illustrates how the technology is better suited to some tasks than others: it copes more easily with the subjective qualities of visual media than with the precise meaning of words.

The pitfalls and risks of AI-generated content

Data scientists have a responsibility to recognize potential risks AI may pose, and so do marketers or anyone else using the technology for commercial purposes. We’ve seen plenty of AI systems melt down in recent years with varying consequences.

Microsoft has had its fair share of PR blushes, most notably when its Tay chatbot started spewing Nazi propaganda for the whole world to see. It was embarrassing for Microsoft, but it highlighted the dangers of bias, misinformation and other factors working their way into algorithms.

This is particularly concerning in a society already struggling with disinformation and misinformation. Today, we’re talking about algorithms writing stories, but bigger discussions are already taking place about the risk of deepfakes sparking nuclear wars.

Every year, AI technology becomes capable of saying more, and saying it more convincingly. The danger is that it has no understanding of what it’s saying or of the implications its words can have for people or society in general.

AI holds great potential for marketers and content creation but, as with any tool, it’s vital that the people using it understand its capabilities, risks and limitations – and, above all, how to use it safely.

Content by The Drum Network member:

Vertical Leap

We are an evidence-led search marketing agency that helps brands get found online, drive qualified traffic to their websites and increase conversions/sales.

To...