Nine most common SEO audit issues revealed

Website audits are a crucial part of any SEO strategy, flagging up technical issues before they cause too much damage to your search ranking. As a website grows, it naturally develops complications and some issues are more common than others.

Vertical Leap provide marketers with a guide for auditing their website and a free SEO health check. / Myriam Jessier

After running hundreds of SEO audits for our customers, our data reveals the nine most common issues that constantly arise.

Bad, broken and missing links

It’s no secret that links are one of the most important ranking factors in Google’s search algorithm. There are five types of link problems, in particular, that we see a lot.

- Low-quality links: inbound links from third-party websites that add no value to your search ranking and, in some cases, significantly hurt it.
- Broken links: especially common when image file paths are changed in CMS platforms like WordPress, or when the URL of a post is inadvertently changed after publishing.
- Missing internal links: missed opportunities to link to your most important pages from other, relevant pages on your website (most commonly, blog posts).
- Non-secure links: especially common when links are missed while migrating from HTTP to HTTPS.
- Dead links: old links pointing to pages or files that are no longer on your site.
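As an illustration of how one of these checks might be automated, the sketch below scans a page’s HTML for links and images that still use plain HTTP. It is a minimal example built on Python’s standard library, not the tooling used in our audits; the class name, function name and sample URLs are hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the URLs found in <a href> and <img src> attributes."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        wanted = {"a": "href", "img": "src"}.get(tag)
        if wanted is None:
            return
        for name, value in attrs:
            if name == wanted and value:
                self.urls.append(value)


def find_insecure_links(html):
    """Return every link or image URL that still uses plain HTTP."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return [url for url in collector.urls if url.lower().startswith("http://")]


# Hypothetical page fragment left over from an HTTP to HTTPS migration.
sample = """
<a href="https://example.com/pricing">Pricing</a>
<a href="http://example.com/blog/old-post">Old post</a>
<img src="http://example.com/uploads/logo.png" alt="Logo">
"""
print(find_insecure_links(sample))
```

Broken and dead links need a live request per URL to confirm, so they are left out of this offline sketch.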

Thin or duplicate content

Google tells us that content quality is the most important ranking factor but quality is notoriously difficult to quantify. So the search giant has to rely on other signals like inbound links, user experience and performance metrics to try and determine the quality of every page.

There are certain aspects of content quality that can be quantified, though, and the two most common problems are:

- Thin content: pages that lack enough content to justify having a dedicated page.
- Duplicate content: multiple pages with the same or very similar content.

There are certain scenarios where pages can legitimately keep the content light, such as contact pages or indexed login pages. Google is capable of understanding when thin content is justified - as long as you’ve got the technical basics covered.

Duplicate content, on the other hand, is harder to justify but also more difficult to avoid in some cases, such as product pages where multiple versions of the same or similar products share the same specs or features, making it difficult to write unique descriptions.
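Both problems can be approximated with simple text measures. The sketch below flags thin pages by word count and near-duplicate pages by word-level similarity. The 300-word and 0.8 similarity thresholds are assumptions chosen for illustration, not figures from Google or from our audits, and the function names are hypothetical.

```python
from difflib import SequenceMatcher

MIN_WORDS = 300        # assumed cut-off for "thin" content
DUPLICATE_RATIO = 0.8  # assumed cut-off for "very similar" content


def is_thin(text, min_words=MIN_WORDS):
    """True when the page body has fewer words than the threshold."""
    return len(text.split()) < min_words


def similarity(a, b):
    """Word-level similarity between two texts, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.split(), b.split()).ratio()


def find_near_duplicates(pages, threshold=DUPLICATE_RATIO):
    """Return pairs of URLs whose body text is at least `threshold` similar.

    `pages` maps a URL to the plain text of that page.
    """
    urls = sorted(pages)
    pairs = []
    for i, first in enumerate(urls):
        for second in urls[i + 1:]:
            if similarity(pages[first], pages[second]) >= threshold:
                pairs.append((first, second))
    return pairs


# Hypothetical product pages that differ by a single word.
pages = {
    "/shirt-red": "red cotton shirt with long sleeves and two pockets",
    "/shirt-blue": "blue cotton shirt with long sleeves and two pockets",
    "/contact": "contact us by phone or email",
}
print(find_near_duplicates(pages))
```

Comparing every pair of pages is fine for a small site but grows quadratically, so a larger crawl would need a cheaper first pass.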

Page titles

Page titles are one of the strongest on-page signals, providing key information about the content on your pages to both users and search engines. Here are the most common problems that crop up in our audits:

- Page titles are missing
- Too general or vague
- Not relevant to the content
- Too long to show in the SERPs

The most serious page title issues involve deceptive or dishonest titles, click-bait tactics, or titles that simply aren’t compelling enough for anyone to click.
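The two mechanical checks, missing titles and titles that are too long, are easy to script. The sketch below is one possible approach using Python’s standard library. The 60-character limit is an assumption for illustration, since the point at which a title is cut off in the SERPs depends on rendered width rather than a fixed character count. Relevance and vagueness still need a human reviewer.

```python
from html.parser import HTMLParser

MAX_TITLE_CHARS = 60  # assumed limit, not an official figure


class TitleParser(HTMLParser):
    """Capture the text of the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_title(html, max_chars=MAX_TITLE_CHARS):
    """Return 'missing', 'too long' or 'ok' for a page's title."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    title = (parser.title or "").strip()
    if not title:
        return "missing"
    if len(title) > max_chars:
        return "too long"
    return "ok"


print(audit_title("<html><head></head><body>Hello</body></html>"))
```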

Meta descriptions

Meta descriptions provide users with important context about the content on the page, helping them to choose which link to click. They are also a chance to make your listing stand out on the page.

Amazingly, the most common mistake we see is that meta descriptions are missing altogether, which forces Google to simply show the opening sentence of your page. The other issue is that they are either too long to show in organic listings or too short to provide valuable context.
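A similar check works for meta descriptions. The sketch below reports whether a description is missing, too short or too long. The 50 and 160 character bounds are assumptions for illustration only, and the names are hypothetical.

```python
from html.parser import HTMLParser

MIN_CHARS = 50   # assumed lower bound for useful context
MAX_CHARS = 160  # assumed upper bound before truncation


class MetaDescriptionParser(HTMLParser):
    """Capture the content of the first <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.description is not None:
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""


def audit_meta_description(html, min_chars=MIN_CHARS, max_chars=MAX_CHARS):
    """Return 'missing', 'too short', 'too long' or 'ok'."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    parser.close()
    description = (parser.description or "").strip()
    if not description:
        return "missing"
    if len(description) < min_chars:
        return "too short"
    if len(description) > max_chars:
        return "too long"
    return "ok"


print(audit_meta_description('<meta name="description" content="Short.">'))
```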

No sitemap in robots.txt

You want to include a link to your sitemap in your robots.txt file to help search bots understand the architecture of your website, but our audits often find this is missing.
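In practice the link is a single `Sitemap:` line in robots.txt. The sketch below shows what such a file might look like and how an audit script could confirm the line is present. The file contents and URL are hypothetical, and the parser is deliberately simple rather than a full robots.txt implementation.

```python
def sitemap_urls(robots_txt):
    """Return the URLs declared on 'Sitemap:' lines in a robots.txt file."""
    urls = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


# Hypothetical robots.txt with the sitemap declared.
with_sitemap = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

# The same file with the line missing, as our audits often find.
without_sitemap = """\
User-agent: *
Disallow: /admin/
"""

print(sitemap_urls(with_sitemap))
print(sitemap_urls(without_sitemap))
```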

Missing or incorrect use of tags

More than half of the entire web uses content management systems (CMS) and a key benefit of these platforms is that you can type things like page titles and meta descriptions into text fields without marking up any of the tags they use.

So, even with no understanding of SEO, WordPress users are unlikely to leave the page title field empty before publishing a new page. Other tags can still be problematic, though, even when using a CMS.

We commonly find the following tags are missing or misused when we run an audit:

- Heading tags: missing tags, incorrect use of different heading tags or heading text that is too long.
- Hreflang tags: implementation problems on multilingual or regional websites, ranging from incorrect language codes and missing return links to broken links and more.
- Canonical tags: missing or incorrect canonical tags, which can result in duplicate content being crawled and indexed.
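Missing return links are a good example of a tag problem that is hard to spot by eye: if page A lists page B as an hreflang alternate, page B is expected to list page A in return. The sketch below assumes the hreflang annotations have already been crawled into a dictionary. The function name and URLs are hypothetical.

```python
def missing_return_links(hreflang_map):
    """Find hreflang alternates that do not link back.

    `hreflang_map` maps a page URL to the set of alternate URLs it declares.
    Returns (page_missing_the_link, page_it_should_point_to) pairs.
    """
    missing = []
    for page, alternates in sorted(hreflang_map.items()):
        for alternate in sorted(alternates):
            if alternate == page:
                continue  # self-references need no return link
            if page not in hreflang_map.get(alternate, set()):
                missing.append((alternate, page))
    return missing


# Hypothetical crawl: the English page lists the French page, but not vice versa.
crawl = {
    "/en/": {"/en/", "/fr/"},
    "/fr/": {"/fr/"},
}
print(missing_return_links(crawl))
```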

Pages with slow load times

Loading times are still one of the most common issues flagged up in SEO audits and the search penalty for page speed is about to get heavier.

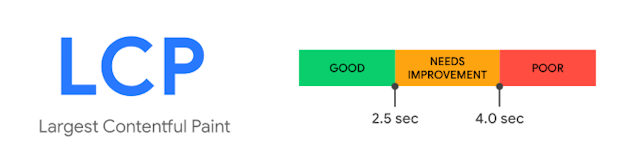

Google is set to roll out a new page experience signal in May this year, which includes a new signal, measurement and benchmark for loading times, as part of the Core Web Vitals initiative.

Pages that fail to load in 2.5 seconds or less after the update will miss out on a small ranking boost, meaning pages that take too long to load could fall down the SERPs as faster pages climb above them.

Images aren’t optimised

As a general rule, make sure images are no larger than 1MB. It’s also worth compressing images as much as you can without sacrificing the quality your viewing experience requires (you almost certainly don’t need 4K resolution images).

The other common issue we find with images is that alt-text is missing. Screen readers use this information to describe images for vision-impaired users so missing alt-text is a big accessibility problem. Google also uses this information to rank and deliver image results for relevant searches.
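Both image checks can be scripted. The sketch below lists images with no alt attribute and files over the 1MB rule of thumb. It assumes file sizes have already been collected, treats 1MB as 1,000,000 bytes, and treats an empty `alt=""` as an intentional marker for decorative images. All names and paths are hypothetical.

```python
from html.parser import HTMLParser

MAX_IMAGE_BYTES = 1_000_000  # the 1MB rule of thumb, taken as 10**6 bytes


class ImageCollector(HTMLParser):
    """Record the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if "alt" not in attributes:
            self.missing_alt.append(attributes.get("src") or "(no src)")


def images_missing_alt(html):
    """Return the src of each image lacking an alt attribute."""
    collector = ImageCollector()
    collector.feed(html)
    collector.close()
    return collector.missing_alt


def oversized_images(sizes_in_bytes, limit=MAX_IMAGE_BYTES):
    """Return the paths of images larger than `limit` bytes."""
    return sorted(path for path, size in sizes_in_bytes.items() if size > limit)


html = '<img src="/a.png" alt="Team photo"><img src="/b.png"><img src="/c.png" alt="">'
print(images_missing_alt(html))
print(oversized_images({"/a.png": 250_000, "/hero.jpg": 3_400_000}))
```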

Missing pages and redirects

As a website grows, pages are inevitably moved or removed and, in some cases, you could migrate your entire site to a different location. When this happens, there’s a good chance you’ll have inbound links pointing to pages that have been moved to a new URL or removed entirely.

In this case, you want to redirect users to the new version of your page or, if the page has been removed entirely, to the page most relevant to the topic or anchor text they clicked. Use 301 redirects to tell search engines and users that the page has moved permanently.

Never use 301 redirects if you plan to use the URL in question again in the future and only ever apply one 301 redirect to a URL – don’t set up redirect chains that run through multiple URLs.
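Redirect chains are straightforward to detect once you have a list of redirect rules. The sketch below assumes the rules are available as a dictionary of source URL to target URL, counts the hops from any starting URL and rewrites each rule to point straight at its final destination. The function names and URLs are hypothetical.

```python
def resolve(url, redirects):
    """Follow redirects from `url` and return (final_url, hops).

    Raises ValueError if the rules loop back on themselves.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        seen.add(url)
    return url, hops


def flatten(redirects):
    """Point every source URL directly at its final destination."""
    return {source: resolve(source, redirects)[0] for source in redirects}


# Hypothetical rules where /old reaches /new through an extra hop.
rules = {"/old": "/interim", "/interim": "/new"}
print(resolve("/old", rules))
print(flatten(rules))
```

Any URL that resolves in more than one hop is a chain worth flattening.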

Something else we see in our audits is default 404 pages that haven’t been optimised. Create custom 404 pages with links included to relevant parts of your website so that users are directed back to your site when they mistype a URL.

Sally Newman, SEO specialist at Vertical Leap

Content by The Drum Network member:

Vertical Leap
