AI and ethics: Time to talk about responsibility

It is easy to understand who is responsible for airline safety. Although Artificial Intelligence (AI) is a new phenomenon, its structure of responsible parties is very similar to that of aviation.


Defining who is in charge of artificial intelligence has prompted much discussion. Experts agree that legislation lays the foundation for the ethical use of AI. For regulatory purposes, AI should be treated like any other automated system. Contrary to common fears, there is nothing magical about the capabilities of AI, at least in the foreseeable future. Currently, machine learning (ML) is the most advanced form of AI. Despite its powerful abilities, ML does not invent anything creative or new that does not already exist in the data provided. The safety issues of AI lie mostly in the collection of personal data and in technologies that interact physically with humans, such as machines, cars or aircraft.

A frequent question is: Who is responsible for the resulting actions of an AI?

AI can readily be compared to the airline industry, which is familiar to us all and is well regulated because of the obvious safety issues surrounding it. But who is responsible for an aeroplane flying safely from A to B? First of all, the users of the aircraft: the pilots, engineers, cabin crew and airport staff, each working in their own area of responsibility and expertise. Secondly, the manufacturer of the aircraft. The leading aircraft manufacturers have professionals in every aspect of aeroplane building and have lengthy experience in developing high-quality products.

AI is not much different. It is a technology built by humans, just as aeroplanes are. Most AI applications do not currently pose much physical risk to humans, mostly because the application areas of machine learning are still limited compared with all the technology that runs on CPUs.

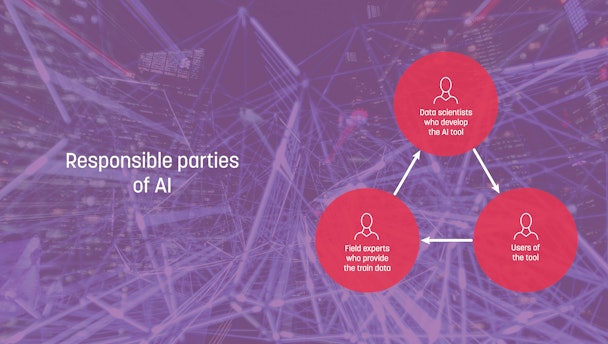

Responsibility for AI decision-making should work like this: legislators and authorities should define the boundaries for collecting and using data, and the certificates needed to be eligible to produce AI tools, just as they would regulate any other tools or products that significantly affect human lives. For AI, there are three responsible parties:

1. The field experts, who practise their profession and at the same time provide training data for machine-learning-based AI.

2. The AI model and product developers, who need professional skills in understanding how different algorithms behave on different kinds of data and in different types of AI products.

3. The users of the AI tool, who should follow the user instructions in order to get the expected behaviour from the AI decision-making system.

Utopia Analytics is a text analytics company which builds machine learning tools for all the natural languages in use on the planet. In the cycle of responsibility, the duty of the AI product company is to make sure the tools are high quality and follow high ethical standards. For transparency, Utopia has created and published a framework for the ethical use of AI. It emphasizes common principles such as human-first, comprehensibility, non-discrimination and human dignity, which are put into action together with the customer.

One of Utopia’s products is Utopia AI Moderator, which can moderate comments, messages, user profiles, user names, images, videos, sales ads and online reviews: any kind of user-generated content, in any language. It is used on news sites, online marketplaces, in-game chats, review sites and social media services, as well as in insurance and customer service departments.

Utopia gives its customers the privilege and responsibility of defining their own moderation policies on their platforms. Otherwise, too much power would be concentrated in one company. Since the launch of Utopia AI Moderator in 2016, Utopia has collaborated with its customers to share responsibility: the customer’s field experts provide the moderation policy and use the tool, and Utopia keeps the AI high quality for the customer’s data. As far as regulation is concerned, besides the normal commercial terms, Utopia’s contracts also state that if a party breaches the United Nations Universal Declaration of Human Rights, all cooperation will be terminated. That way both parties work together to contribute to better ethics and a better future for humans with the help of AI tools.

Utopia Analytics is holding a panel debate moderated by The Drum at the Finnish Ambassador’s Residence in London on March 18th from 2.30.


Edit: Due to the growing coronavirus situation, this event has been postponed. However, you can register your interest here and an update will be provided in due course: https://www.eventbrite.co.uk/e/utopia-analytics-generation-alpha-can-ai-educate-better-humans-tickets-90303870307