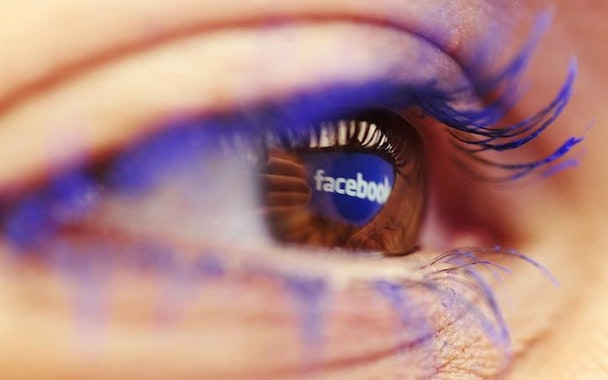

Facebook is now using AI to help counter the spread of terrorist content

Facebook is now using artificial intelligence (AI), building a team of security experts and increasing cooperation with third parties to help prevent militant groups from using the social network to communicate among themselves and with the outside world.

Media and tech companies have faced criticism from governments for their perceived inaction over the spread of militant content.

In a blog post entitled “Hard Questions: How We Counter Terrorism”, published today (June 15), Facebook claimed it was experimenting with AI to become “better at identifying potential terrorist content”, growing its team of security experts, and “strengthening partnerships with other companies, governments and NGOs in this effort”.

The post, attributed to Facebook’s Monika Bickert, director of global policy management, and Brian Fishman, counterterrorism policy manager, explains how the social network’s team of security experts is using AI, including image recognition and semantic classification software, to identify content supporting paramilitary groups.

“We remove terrorists and posts that support terrorism whenever we become aware of them. When we receive reports of potential terrorism posts, we review those reports urgently and with scrutiny,” reads the post.

“And in the rare cases when we uncover evidence of imminent harm, we promptly inform authorities. Although academic research finds that the radicalization of members of groups like ISIS and Al Qaeda primarily occurs offline, we know that the internet does play a role — and we don't want Facebook to be used for any terrorist activity whatsoever.”

The pair claim that the use of AI, although a recent addition to the company's efforts, is already paying dividends, and that the move reflects a wider push to become more efficient at removing such material.

“We're currently experimenting with analyzing text that we've already removed for praising or supporting terrorist organizations such as ISIS and Al Qaeda so we can develop text-based signals that such content may be terrorist propaganda. That analysis goes into an algorithm that is in the early stages of learning how to detect similar posts. The machine learning algorithms work on a feedback loop and get better over time,” reads the text.

This initiative includes efforts to identify “repeat offenders”: those who open Facebook accounts that publicly support causes such as militant jihadism, are subsequently expelled from the social network, and then attempt to resume the activity under a pseudonym.

The announcement comes amid pressure from politicians on Facebook and other messaging platforms to take more responsibility for curbing the spread of such content, and the post underlines the company's efforts to work with Microsoft, Twitter and YouTube to do so.

Digital media and tech companies have faced criticism (as well as legal tussles) over their use of encryption software, which makes it difficult for government authorities to monitor the communications between groups they deem to be extremist.

Bickert and Fishman’s post goes on to advocate the use of such technologies, arguing that encryption also protects the privacy of law-abiding users and that the company does attempt to support law enforcement.

“Because of the way end-to-end encryption works, we can’t read the contents of individual encrypted messages — but we do provide the information we can in response to valid law enforcement requests, consistent with applicable law and our policies,” reads the post.

The post concludes: “We want Facebook to be a hostile place for terrorists. The challenge for online communities is the same as it is for real world communities – to get better at spotting the early signals before it's too late. We are absolutely committed to keeping terrorism off our platform, and we’ll continue to share more about this work as it develops in the future.”